Amazon SageMaker

Amazon SageMaker is a fully managed machine learning (ML) service that allows developers and data scientists to build, train, fine-tune, deploy, and monitor ML models at scale.

Key Use Cases

- Training/fine-tuning ML and deep learning models

- Hosting models for inference (real-time/batch)

- Automating ML workflows (MLOps)

- Experiment tracking, debugging, and monitoring

- Deploying pre-trained foundation models (LLMs)

SageMaker does not train a full LLM from scratch (e.g., from token-level data) unless you set up the massive infrastructure and code needed. Training a foundational LLM like GPT-3 from scratch requires thousands of GPUs and petabytes of data, which is typically done by AI labs or hyperscalers.

Instead, SageMaker is commonly used to fine-tune or deploy open-source LLMs or use Amazon's own pre-trained models (via JumpStart).

Common Scenarios

| Scenario | SageMaker Role |

|---|---|

| Fine-tune a BERT or LLaMA model | Yes |

| Train a model from scratch | (for small to mid-size models) |

| Train GPT-3-like models | Not practical (use custom infra) |

| Host a chatbot backend model | Real-time inference endpoint |

| Perform batch predictions | Batch Transform |

Core Components of SageMaker

- SageMaker Studio

- Web-based IDE for end-to-end ML

- JupyterLab interface to write, train, test models

- Supports notebooks, experiments, pipelines

- SageMaker Pipelines: CI/CD for ML workflows (MLOps)

- SageMaker Training: Train models using

- Built-in algorithms

- Pre-built containers (Hugging Face, PyTorch, TensorFlow)

- Bring Your Own Script (BYOS)

- Supports distributed training, Spot Instances, automatic model tuning (Hyperparameter Optimization)

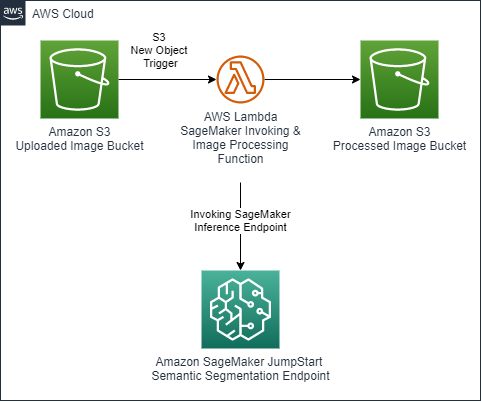

- SageMaker JumpStart

- Access pre-trained models (e.g., LLaMA, Falcon, GPT2)

- Deploy or fine-tune models easily with templates

- Supports foundation models from Amazon and Hugging Face

- Other components

- SageMaker Inference Options

- SageMaker Experiments

- SageMaker Model Registry

- SageMaker Debugger & Monitor

- SageMaker Autopilot